You’re about to invest money in an influencer collaboration. The photos look sharp. The feed feels active. But something in the back of your mind asks whether the audience behind it is real.

- Connect the account: I send a simple consent link. They approve.

I’ve had that same doubt scrolling through pages that looked perfect on the outside.

Here’s what I learned: APIs give you the receipts. You can check how their audience grew, where those followers actually live, and whether the engagement matches up.

And the playground is massive. Approximately 5.41 billion people are currently on social media (DataReportal, July 2025). That’s why fraud slips in so easily. With the right influencer vetting tools, you can spot fake vibes before they burn your budget.

What Counts as Influencer Fraud Today

So, what does “fraud” even look like in influencer marketing right now? Let’s keep it simple.

- Fake followers: Purchased accounts that look real but never interact.

- Paid pods: Groups of creators liking and commenting on each other’s posts to pump numbers.

- Comment farms: Low-quality accounts dropping the same comments across dozens of posts.

- Giveaway loops: Temporary follower spikes from contests where people follow just to win a prize.

- AI-generated personas: Entire influencer profiles built by software instead of humans.

Why the API Approach Beats Manual Vetting

I’ve tried vetting with screenshots and spreadsheets. I’d zoom in, count comments, and make a call. It felt messy. I couldn’t tell which numbers were real or how old they were. After a few shaky picks, I switched to APIs, and the process fell into place.

With APIs, I get consented data pulled straight from the platforms. Identity, audience, engagements, everything in one place. Updates land on a schedule, so if a creator jumps by 20,000 followers in two days, I see it before I approve a spend. And the best part for me: every pull is saved.

I keep the request, the snapshot, and the thresholds. If someone asks, “Why did you pass or fail this creator?” I have the receipts.

How I set it up (you can copy this)

- Pull the basics: Profile, follower history, audience geo and language, post metrics.

- Set refresh rules: Weekly checks are the default, with daily checks applied if a campaign is live.

- Flag spikes: Growth velocity, geo mismatch, odd comment patterns.

- Store everything: JSON snapshots with timestamps and the decision I made.

- Review edge cases: If a profile appears mixed, I conduct a quick manual review before confirming.

That flow keeps me calm. I spend on creators with real reach, and I can show my work if anyone audits the campaign.
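As a rough illustration of the refresh rule above (weekly by default, daily while a campaign is live), here is a minimal Python sketch. The function names are hypothetical and not part of any platform SDK; they only show how the cadence could be encoded.

```python
from datetime import datetime, timedelta

WEEKLY = timedelta(days=7)  # default cadence
DAILY = timedelta(days=1)   # cadence while a campaign is live


def next_check_due(last_checked: datetime, campaign_live: bool) -> datetime:
    """Return the time at which the creator should be pulled again."""
    return last_checked + (DAILY if campaign_live else WEEKLY)


def is_due(last_checked: datetime, campaign_live: bool, now: datetime) -> bool:
    """True once the refresh interval has fully elapsed."""
    return now >= next_check_due(last_checked, campaign_live)
```

A scheduler or cron job could call `is_due` for each creator and trigger a fresh pull only when it returns `True`.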

The Data Signals to Pull (and the Red Flags to Watch)

When I began using APIs for influencer checks, I realized the real power lies in identifying patterns across various signals. Think of it like piecing together a puzzle; you fetch one piece at a time, and the picture gets clearer. Here’s what I focus on.

Follower growth timeline

The growth chart is my first stop. Numbers don’t lie when you track them over time. I grab profile data and follower history with an API and look for patterns. A smooth climb is usually organic. However, if I see a sudden surge within 24–72 hours, my eyebrows go up.

Here’s what I’ve noticed:

- Steady growth = healthy community.

- Sawtooth patterns = giveaway loops that fade as fast as they start.

- Spikes from irrelevant countries = bought traffic.

I once checked a fitness influencer who gained 12K followers in two days, all from a region far outside her audience. Reach and impressions stayed flat. That was the red flag that saved me from a costly collab.
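To make the 24–72 hour surge check concrete, here is a minimal sketch. It assumes you already have a daily follower history as `(date, count)` pairs; the 10% threshold is an illustrative assumption, not a benchmark from any platform.

```python
from datetime import date


def find_growth_spikes(history, window_days=3, max_growth_pct=10.0):
    """Flag days whose follower count grew too fast versus a recent baseline.

    history: list of (date, follower_count) tuples sorted by date.
    Returns a list of (date, growth_pct) for every flagged day.
    """
    flagged = []
    for i, (day, count) in enumerate(history):
        # Earliest earlier reading that still falls inside the window.
        baseline = next(
            ((d, c) for d, c in history[:i] if (day - d).days <= window_days),
            None,
        )
        if baseline is None or baseline[1] <= 0:
            continue
        growth_pct = (count - baseline[1]) / baseline[1] * 100
        if growth_pct >= max_growth_pct:
            flagged.append((day, round(growth_pct, 1)))
    return flagged


history = [
    (date(2025, 1, 1), 100_000),
    (date(2025, 1, 2), 100_200),
    (date(2025, 1, 3), 100_400),
    (date(2025, 1, 4), 112_500),  # sudden jump
    (date(2025, 1, 5), 112_600),
]
spikes = find_growth_spikes(history)
```

A flagged day is only a prompt to look closer: a viral post or press mention can produce the same shape, which is why it helps to compare against reach and impressions for the same dates.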

Engagement quality (not just rate)

People love quoting engagement rate, but trust me, it’s not the whole story. I pull post-level data: likes, comments, impressions, and saves. Then I compare how those pieces fit together.

A high engagement rate (ER) can be hype or fakery. I once saw an account with a 4% ER, but the comments were all “🔥🔥🔥” from the same few profiles. Real engagement has variety: questions, jokes, and even light trolling. That mix is what I trust.
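One way to quantify "the same few profiles" is to measure how much of the comment volume comes from the most active commenters. This is a sketch under the assumption that you can list the author of each comment; the function name is hypothetical.

```python
from collections import Counter


def top_commenter_share(comment_authors, top_n=5):
    """Fraction of all comments written by the top_n most active authors."""
    if not comment_authors:
        return 0.0
    counts = Counter(comment_authors)
    top_total = sum(n for _, n in counts.most_common(top_n))
    return top_total / len(comment_authors)


authors = ["a"] * 6 + ["b"] * 3 + ["c"]
share = top_commenter_share(authors, top_n=2)
```

A share close to 1.0 means a handful of accounts produce nearly all the comments, which fits a pod more than a community. Where to draw the line is a judgment call.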

Audience geography & language

This one always feels like a vibe check. If a creator posts in English but 70% of their followers are from unrelated regions, something’s sus. APIs give me the country breakdown, and I line it up with the content.

Questions I ask myself:

- Do the locations match the creator’s niche?

- Does the timing of new followers line up with when they post?

- Are language settings consistent with the captions?

I once spotted a burst of followers landing at 3 a.m. local time from a single country. That cluster didn’t match the creator’s audience at all. Without the API pull, I’d have missed it.

Audience authenticity & quality

Here I go deeper into follower health. APIs fetch account age, bot scores, and profile completeness. An authentic audience is diverse, encompassing different ages, posting habits, and engagement levels. Fake ones look copy-pasted.

Red flags I keep an eye out for:

- Zero-post followers stacked in bulk.

- Usernames with lazy repeats like “alex1234” or “user5678.”

- Waves of new accounts created in the same week.

TikTok itself admitted to removing tens of millions of fake accounts in its quarterly sweeps. That’s proof the problem is live, not theory. If a profile’s audience leans too heavily on low-quality signals, I step back.
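The red flags above can be turned into rough heuristics. The sketch below assumes a sample of follower records with hypothetical fields `username`, `post_count`, and `created`; real APIs may expose different fields or only aggregate scores, and the username pattern is a deliberately crude assumption.

```python
import re
from collections import Counter
from datetime import date

# Letters followed by four or more digits, e.g. "alex1234" (crude heuristic).
GENERIC_USERNAME = re.compile(r"^[a-z]+\d{4,}$")


def low_quality_share(followers):
    """Share of followers with zero posts or a generic-looking username."""
    if not followers:
        return 0.0
    flagged = sum(
        1
        for f in followers
        if f["post_count"] == 0 or GENERIC_USERNAME.match(f["username"].lower())
    )
    return flagged / len(followers)


def busiest_creation_week_share(followers):
    """Share of followers whose accounts were created in the single busiest ISO week."""
    if not followers:
        return 0.0
    weeks = Counter(tuple(f["created"].isocalendar())[:2] for f in followers)
    return max(weeks.values()) / len(followers)


sample = [
    {"username": "alex1234", "post_count": 12, "created": date(2025, 3, 3)},
    {"username": "user5678", "post_count": 0, "created": date(2025, 3, 4)},
    {"username": "maria.cooks", "post_count": 40, "created": date(2021, 6, 1)},
    {"username": "jay_runs", "post_count": 0, "created": date(2025, 3, 5)},
]
```

Plenty of real people never post or have numbers in their handle, so these shares work best as inputs to a review, not as a verdict on their own.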

Comment semantics

Comments tell me if an audience is actually vibing. When I pull text and timestamps, I look for variety. Real fans say things like, “Where did you get that jacket?” or “This is hilarious.” Fake ones drop cookie-cutter lines.

Signs that make me side-eye an account:

- Dozens of “Great post!” clones.

- Emoji-only chains that add zero context.

- Big bursts of comments landing in the same minute.

I once caught an influencer with over 200 comments on a post, but 90% of them were simply “✅” and “Wow.” That’s not community, that’s noise.
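Here is a minimal sketch of how those three signs could be measured from comment text and timestamps. It assumes comments arrive as `(text, datetime)` pairs; the "no words" test simply looks for any letter or digit, so it is a rough stand-in for emoji-only detection.

```python
from collections import Counter
from datetime import datetime


def comment_red_flags(comments):
    """Summarize duplicate, wordless, and same-minute comment patterns.

    comments: list of (text, timestamp) tuples.
    Returns shares between 0.0 and 1.0 for each pattern.
    """
    total = len(comments)
    if total == 0:
        return {"duplicate_share": 0.0, "no_words_share": 0.0, "peak_minute_share": 0.0}

    normalized = [text.strip().lower() for text, _ in comments]
    text_counts = Counter(normalized)
    # Comments whose normalized text appears more than once.
    duplicates = sum(n for n in text_counts.values() if n > 1)
    # Comments with no letters or digits at all (emoji or punctuation only).
    no_words = sum(1 for t in normalized if not any(ch.isalnum() for ch in t))
    # Largest number of comments landing inside a single clock minute.
    per_minute = Counter(ts.replace(second=0, microsecond=0) for _, ts in comments)

    return {
        "duplicate_share": duplicates / total,
        "no_words_share": no_words / total,
        "peak_minute_share": max(per_minute.values()) / total,
    }


comments = [
    ("Great post!", datetime(2025, 5, 1, 10, 0, 5)),
    ("great post!", datetime(2025, 5, 1, 10, 0, 20)),
    ("🔥🔥🔥", datetime(2025, 5, 1, 10, 0, 40)),
    ("Where did you get that jacket?", datetime(2025, 5, 1, 14, 32, 0)),
]
flags = comment_red_flags(comments)
```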

Creator history & disclosures

The past always leaves clues. APIs enable me to scan older posts for sponsored tags, disclosure markers, and posting cadences. I check if creators stay consistent or if they suddenly flip to promo-heavy feeds.

Here’s how I rate disclosure history:

- Clean: Ads are tagged, and the content feels natural.

- Messy: Disclosures missing or inconsistent.

- Sketchy: Posts disappear after backlash or brand challenges.

In 2025, the NAD flagged several creators for failing disclosure standards, and it wasn’t pretty. When I see gaps like that, I think twice. A creator who respects their audience and follows the rules is safer for long-term brand work.

How to Run a Fake Follower Check API (Step-by-Step)

When I first set this up, I wanted something simple: a process I could repeat for every creator without second-guessing. Over time, I refined it into a workflow that feels like a checklist. Here’s how you can run it too.

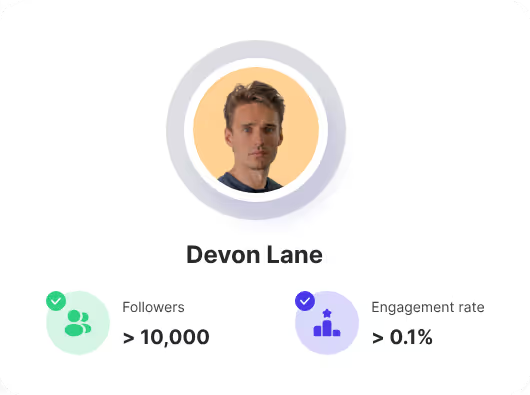

Step 1: Verify identity & fetch base profile

Before you dive into fancy signals or fraud scores, you need to know one thing: Is this even the correct account? I learned this when I once pulled data from a fan account, thinking it was the actual creator. Total facepalm.

That’s why Step 1 focuses on the basics. With the Identity/Profile endpoint, you pull:

- Username and handle

- Bio and profile picture

- Follower count

- Platform ID (a unique identifier that pins the data to one specific account)

These four details indicate whether the creator has connected their account through consent and whether I’m analyzing the actual page, not a clone. A clean pull feels like getting the keys before starting the car; you know you’re in the driver’s seat.

When I get this info, I usually ask myself three quick questions:

- Does the handle match the name the creator gave me?

- Does the bio align with their pitch (e.g., fashion influencer actually posting fashion)?

- Does the platform ID confirm it’s the original account?

It sounds basic, but skipping this step can burn hours later. Once, I vetted a creator who appeared perfect, only to discover that I had checked a backup account instead of their main one. That mistake cost me a campaign slot. Since then, I haven’t moved forward without Step 1.
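The three questions can be encoded as a small pre-flight check. This is a sketch only: the `profile` dictionary and its keys (`username`, `platform_id`, `bio`) are assumed for illustration and will differ from whatever your provider's profile response actually returns.

```python
def normalize_handle(handle):
    """Lowercase a handle and drop surrounding spaces and a leading '@'."""
    return handle.strip().lstrip("@").lower()


def identity_issues(profile, expected_handle, expected_platform_id=None):
    """Return a list of problems found with a fetched profile (empty list = clean)."""
    issues = []
    if normalize_handle(profile.get("username", "")) != normalize_handle(expected_handle):
        issues.append("handle_mismatch")
    if not profile.get("platform_id"):
        issues.append("missing_platform_id")
    elif expected_platform_id and profile["platform_id"] != expected_platform_id:
        issues.append("platform_id_mismatch")
    if not profile.get("bio"):
        issues.append("empty_bio")
    return issues


profile = {
    "username": "@Maya.Eats",  # hypothetical example data
    "platform_id": "1784",
    "bio": "LA food and recipes",
    "follower_count": 48_200,
}
```

Whether the bio matches the pitch still needs a human read; the code only catches the mechanical mismatches, such as a fan or backup account.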

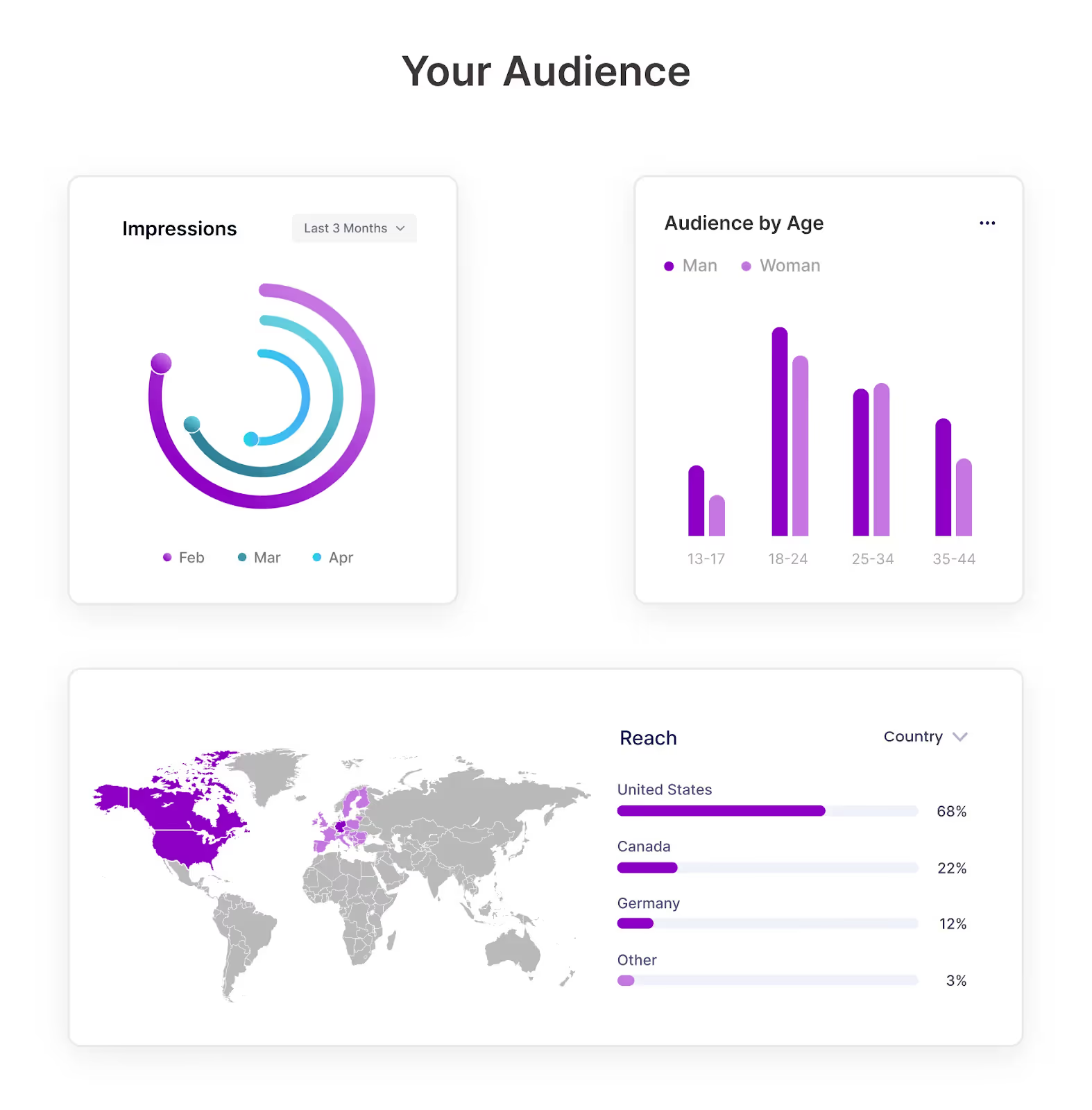

Step 2: Pull audience demographics

Once the base profile is set, I want to know who’s actually behind the follower count. Numbers alone don’t mean much; you need to peek under the hood. That’s where the Audience endpoint comes in.

This pull usually gives me:

- Country and region percentages

- Language mix

- Gender and age splits (if the platform provides them)

Why does this matter? Because alignment is everything. If an influencer’s content is in English but 65% of their audience is from a region that doesn’t usually consume English content, it feels sus. Not always fraud, but worth checking.

Here’s how I line things up:

I once worked with a food creator based in Los Angeles. On the surface, she looked like a good fit. However, when I analyzed her demographics, more than half of her followers were from countries far outside her niche. The audience didn’t match the content, and I passed. That decision probably saved me from paying for impressions that wouldn’t lead to customers.

The lesson: audience breakdowns aren’t just numbers; they’re vibe checks on whether the creator and your market actually align.
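A simple way to put a number on alignment is the share of the audience that sits outside your target markets. The sketch assumes the country breakdown arrives as a mapping of country code to share; the codes and values below are made up for illustration.

```python
def off_target_share(country_shares, target_countries):
    """Fraction of the audience located outside the target countries.

    country_shares: dict such as {"US": 0.30, "CA": 0.10, ...}; values may be
    fractions or percentages because the result is normalized by their sum.
    """
    total = sum(country_shares.values())
    if total <= 0:
        return 0.0
    off_target = sum(
        share for country, share in country_shares.items()
        if country not in target_countries
    )
    return off_target / total


audience = {"US": 0.30, "CA": 0.10, "XX": 0.45, "YY": 0.15}  # hypothetical data
mismatch = off_target_share(audience, target_countries={"US", "CA"})
```

The same function works for the language mix if you pass language codes instead of countries. A high value is a reason to look closer, since creators with genuinely global audiences will also score high.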

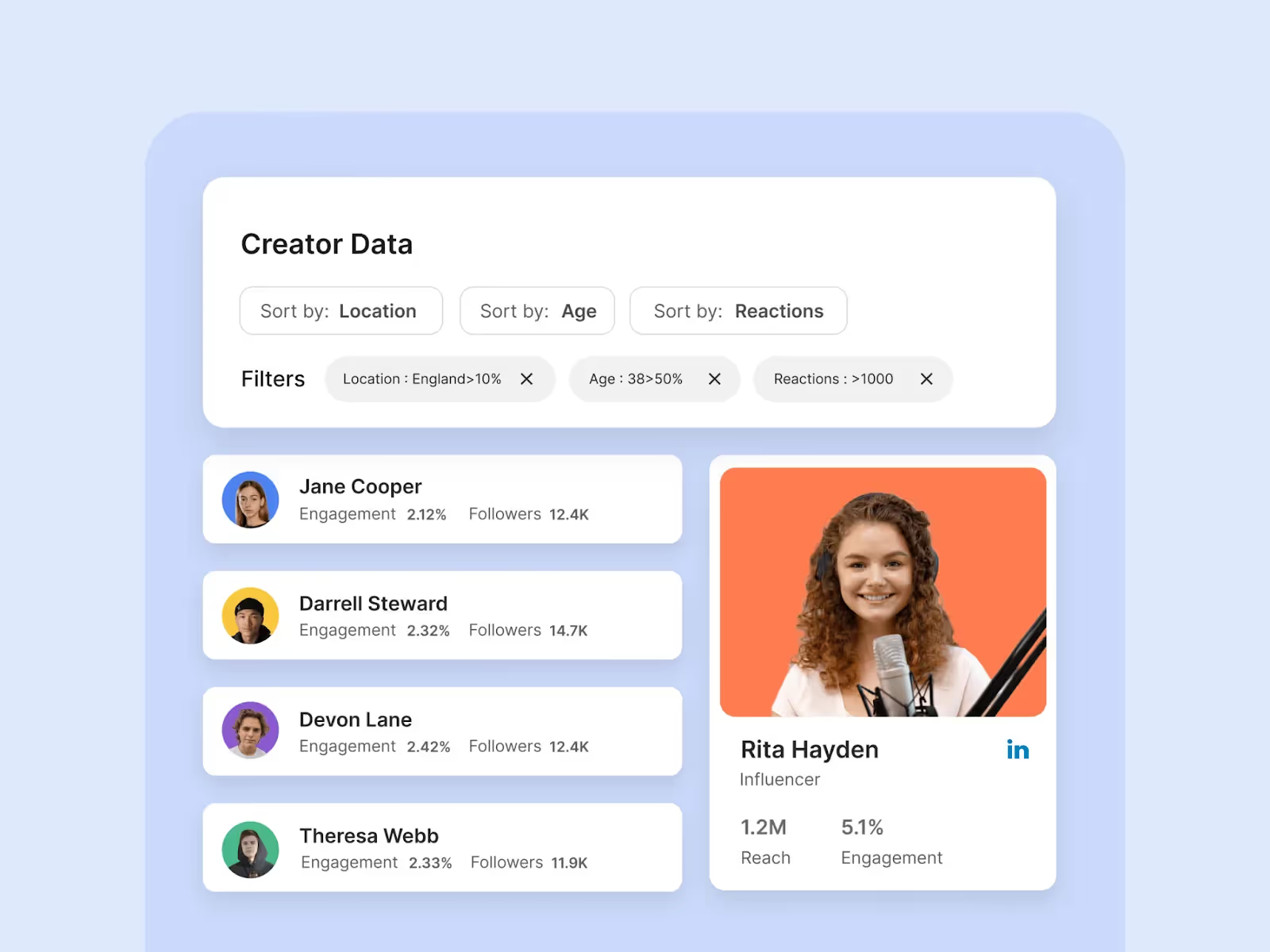

Step 3: Pull recent content & engagements

This is my favorite step, because it shows how people actually interact with the creator’s work. I fetch the last 10–15 posts using the Content/Engagement endpoint.

That gives me impressions, likes, comments, and saves for each post.

From there, I build rolling engagement ratios. A single viral post can mislead you, but a series of posts shows the trend. I like to graph it out, post by post, engagement rate vs. reach. You start to see if the account is thriving or if it’s smoke and mirrors.

Here’s a checklist I keep for myself:

- Likes vs. impressions: Are likes proportional to reach?

- Comments vs. likes: Are people talking, or just tapping the heart button?

- Saves: Do followers care enough to save content for later?

- Consistency: Are these numbers steady, or do they swing wildly?
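The rolling ratios described in this step could be computed as follows. This sketch assumes each post is a dictionary with `impressions`, `likes`, `comments`, and `saves`, and it defines engagement rate as interactions divided by impressions, which is one common convention among several.

```python
def engagement_rates(posts):
    """Per-post engagement rate: (likes + comments + saves) / impressions."""
    rates = []
    for post in posts:
        interactions = post["likes"] + post["comments"] + post["saves"]
        impressions = post["impressions"]
        rates.append(interactions / impressions if impressions else 0.0)
    return rates


def rolling_mean(values, window=5):
    """Mean of each value and up to window - 1 values before it."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


posts = [  # oldest first, hypothetical numbers
    {"impressions": 1000, "likes": 40, "comments": 5, "saves": 5},
    {"impressions": 2000, "likes": 50, "comments": 6, "saves": 4},
]
rates = engagement_rates(posts)
trend = rolling_mean(rates, window=2)
```

Plotting `trend` post by post smooths out a single viral hit, so a steady line versus wild swings becomes easier to see.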

Step 4: Compute risk signals

Now comes the detective work. You have the raw data; it’s time to turn it into actionable signals. Think of this as grading the account.

The main things I look at:

- Growth velocity: Is it slow and steady, or does it spike like a rollercoaster?

- Geo mismatch: Does the audience location make sense for the content?

- Comment duplication: Are comments diverse, or do they all look copy-pasted?

- Engagement outliers: Do their rates fit benchmarks for their size?
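One way to turn the four checks into something repeatable is to compare each computed signal against a threshold and list the ones that trip. The signal names and limits below are illustrative assumptions; tune them to your own benchmarks.

```python
# Hypothetical thresholds: a signal is flagged when its value exceeds the limit.
DEFAULT_THRESHOLDS = {
    "growth_pct_72h": 10.0,          # follower growth within 72 hours, in percent
    "off_target_geo_share": 0.5,     # audience share outside target countries
    "duplicate_comment_share": 0.3,  # share of copy-pasted comments
    "er_vs_benchmark_ratio": 2.0,    # engagement rate relative to a size benchmark
}


def triggered_signals(signals, thresholds=DEFAULT_THRESHOLDS):
    """Return the sorted names of all signals that exceed their threshold."""
    return sorted(
        name
        for name, limit in thresholds.items()
        if signals.get(name, 0.0) > limit
    )


signals = {
    "growth_pct_72h": 12.5,
    "off_target_geo_share": 0.2,
    "duplicate_comment_share": 0.45,
    "er_vs_benchmark_ratio": 1.1,
}
tripped = triggered_signals(signals)
```

Keeping the thresholds in one dictionary makes it easy to store them alongside each decision, so a later audit can see exactly which limits were in force.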

Step 5: Decide & log

At the end of the workflow, you need to make a call. Either the influencer passes the check, or they don’t. But here’s the part that matters most: logging everything.

I save the JSON snapshots, thresholds, and notes for every pull. That way, if someone asks later why I passed or rejected a creator, I can show the receipts. This has bailed me out more than once when a brand partner questioned my choices.

Here’s how I frame it for myself:

- Low risk: Numbers are clean, audience aligns, engagement looks natural → Shortlist.

- Medium risk: A few odd signals, but worth a manual review → Put on hold.

- High risk: Major red flags in growth, geo, or engagement → Reject.

I also set reminders to re-run checks. A creator who looks clean today might drift in three months. By logging and scheduling, I can stay ahead of the game.
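A minimal sketch of the decide-and-log step, assuming you already have a list of triggered flags. Mapping flag counts to risk tiers (none, one or two, three or more) is an assumption made for illustration, as are the function names and the JSON Lines log format.

```python
import json
from datetime import datetime, timezone


def decide(flags):
    """Map triggered flags to an action (count cut-offs are an assumption)."""
    if not flags:
        return "shortlist"  # low risk
    if len(flags) <= 2:
        return "hold"       # medium risk: manual review
    return "reject"         # high risk


def build_log_entry(creator_id, snapshot, thresholds, flags, checked_at=None):
    """Bundle the raw snapshot, thresholds, flags, and decision into one record."""
    checked_at = checked_at or datetime.now(timezone.utc)
    return {
        "creator_id": creator_id,
        "checked_at": checked_at.isoformat(),
        "snapshot": snapshot,
        "thresholds": thresholds,
        "flags": list(flags),
        "decision": decide(flags),
    }


def append_log(path, entry):
    """Append one record as a single JSON line so earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry, sort_keys=True) + "\n")
```

An append-only file keeps every past decision intact, which is what makes the log useful when someone asks why a creator was passed or rejected months later.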

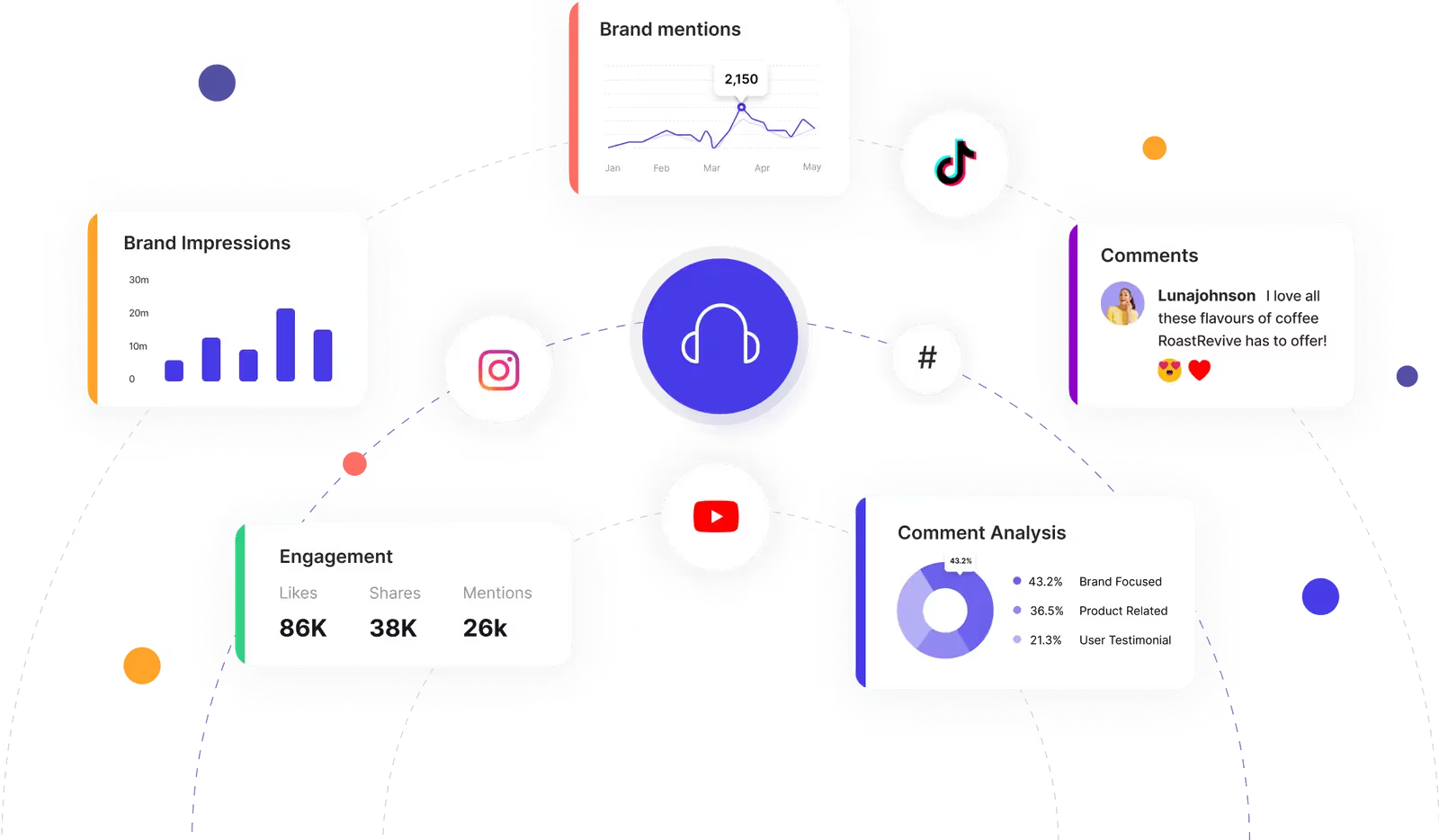

Upgrade Your Influencer Vetting Tools with Fake Follower Check APIs

You’ve seen the playbook. Fraud appears as fake followers, pods, comment farms, giveaway spikes, and even AI-generated personas. APIs cut through that noise.

You pull real data from the source, read the growth curve, check where the audience lives, scan comment quality, and review disclosure history. One flow. Fewer surprises.

Why I trust this approach:

- I see patterns, not just snapshots.

- I catch spikes before I spend.

- I keep receipts for audits and brand safety.

- I can scale checks without losing control.

If you want fewer headaches and cleaner results, move your vetting to APIs. I use Phyllo because it turns vetting into a repeatable system, not a guess.

Ready to run it your way? Sign up for free on Phyllo and launch your first fake follower check today.

FAQ:

What is a “fake follower check API”?

A set of endpoints that pull verified profile, audience, and engagement data from source platforms. You analyze growth, geography, and comment patterns to spot suspicious signals before you hire an influencer.

Which signals catch the most fraud?

Growth spikes in 24–72 hours, audience geography or language that doesn’t match the content, copy-paste comments, engagement rates far outside size benchmarks, and many low-quality or incomplete follower profiles showing up together.

How often should we re-check creators?

Run checks before shortlisting, again before contract, and during the campaign. Every 2–4 weeks works for most teams. Additionally, trigger rechecks when new posts go live using webhooks, allowing you to catch shifts quickly.

Will this block great creators with global audiences?

Use thresholds as guardrails, not hard walls. Review context for each creator. If their audience mix aligns with your goals and target regions, approve them. Add a quick manual read for edge cases to keep strong creators in play.
